Before You Install the Tech Stack, Build the Trust Stack

I was at VenTech last night, the monthly gathering where Santa Barbara’s tech community gets together, startups mingling with students and VCs, all of us trying to figure out what’s next. That’s where I ran into Guy Smith. We go way back. Before he retired, Guy was a professor of English at Santa Barbara City College, the top-ranked community college in America. We worked together when he built SoMA, the School of Media Arts, back in my Wavefront days, and we’ve collaborated on several things over the years. The fact that he still shows up to these events matters.

The conversation turned to AI, which it always does these days. I felt my face light up because I couldn’t help it—this is what I do now with Coastal Intelligence, helping organizations actually implement AI in ways that work. Guy saw the shift in my expression, and his face went the other direction.

“AI is going to make all of us irrelevant,” he said.

There it was. The fear, raw and real.

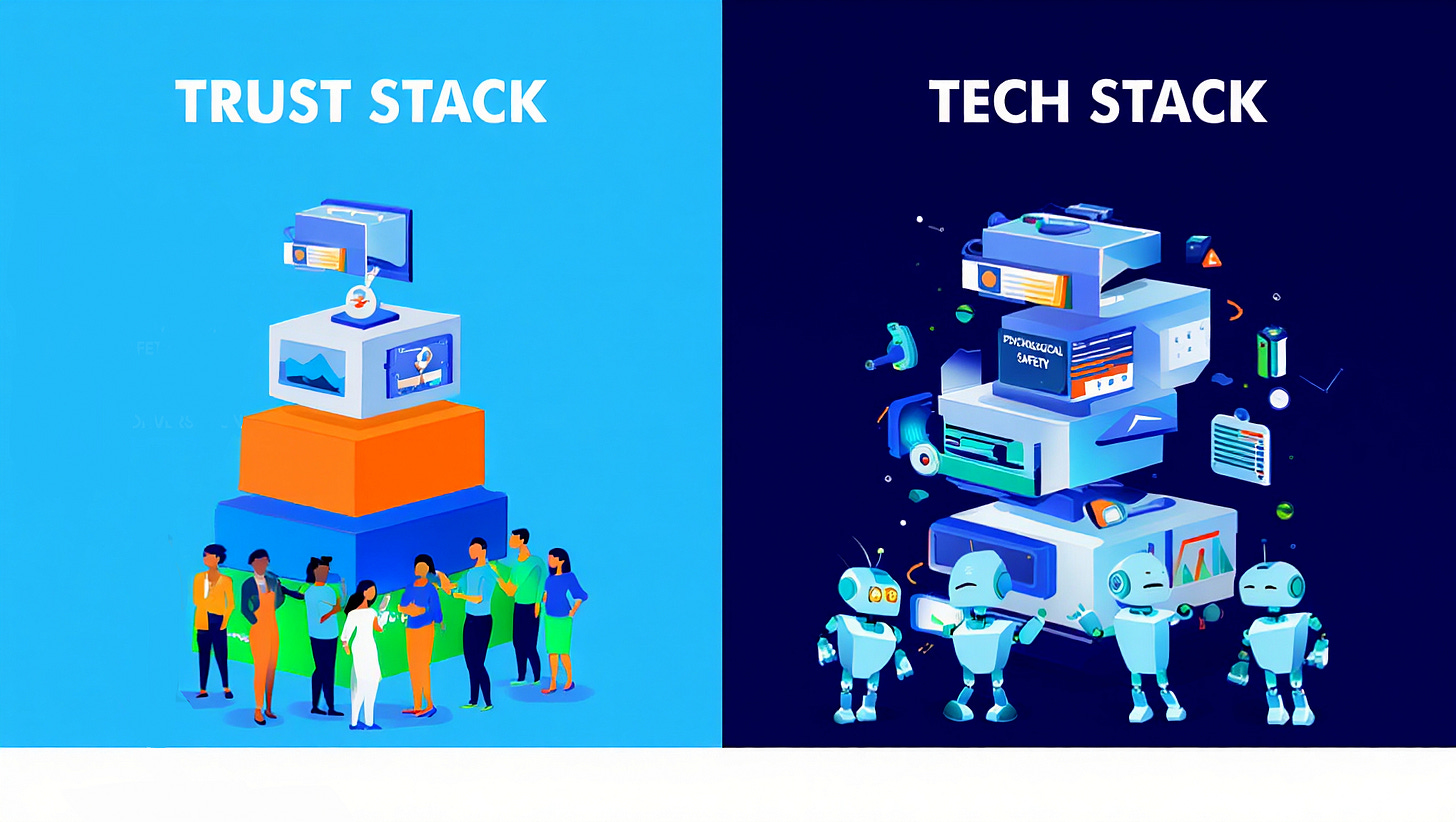

That’s when I said it: “Which is exactly why we focus on the Trust Stack instead of the Tech Stack.”

I could see him processing. Trust Stack? Tech Stack? What? I’d used buzzwords and caught myself, and had to explain quickly what I meant. But here’s the thing—I’ve been having this exact conversation for three weeks straight with different people, and it’s always the same fear, the same resistance, the same fundamental misunderstanding about what’s actually blocking AI adoption. Everyone’s talking about the tech stack, but nobody’s talking about the trust stack. That’s the problem.

The Industry Is Moving at Light Speed

The tech industry wants you to move fast, really fast. New AI tools every week, new capabilities every month, and an unprecedented race among OpenAI, Anthropic, Google, and Microsoft. They’re all shouting the same message: adopt now, implement faster, don’t get left behind. The VCs at VenTech said it explicitly: “The companies that move fastest will win.”

That makes sense from their perspective since they’ve got billions invested and they need adoption, scale, and speed. But here’s what they’re not saying: humans don’t work at light speed. We can’t. We’re not built that way.

Think about it. We spent two years in COVID learning to work from home, getting comfortable in our isolated spaces, learning to distrust physical proximity, and creating entire systems around distance. Then AI arrives and says, “Great news! You can stay home even more. You don’t need to connect face-to-face. Actually, you might not need to connect at all.” And we wonder why trust is eroding.

The Data Tells a Brutal Story

Here’s what nobody wants to talk about: 70 to 95 percent of AI implementations fail.[1] Not fail as in “the technology doesn’t work.” The technology works fine. They fail because people resist. MIT researched this, and multiple studies confirm it: “The failure often has almost nothing to do with the technology itself.”[2]

Let me say that again because it matters. The technology isn’t the problem. The people are the problem, or more accurately, the lack of trust is the problem. Only 15 percent of employees believe their leaders have a clear AI strategy.[3] Seventy percent of challenges in AI projects stem from people and process issues, not technical ones.[4] High-trust organizations outperform low-trust organizations by 286 percent in total return to shareholders.[5]

The numbers don’t lie. This is a trust crisis masquerading as a technology problem.

The Three Faces of Job-Loss Fear

When I talk to people about AI—and I mean really talk, not just present to them—I hear the same three fears in different forms.

Fear One: “AI will replace me completely.”

This is the existential one, the “my entire career is about to become obsolete” fear. It’s not irrational when you’ve seen the headlines about tech companies laying off tens of thousands, about AI doing in seconds what took humans hours.

Fear Two: “I won’t learn fast enough, and someone else will.”

This is the competitive fear, and Scott Galloway nailed it: “You won’t lose your job to AI. You’ll lose your job to someone who knows how to use AI.”[6] That quote lands hard because it’s true. The threat isn’t the technology itself—it’s falling behind while others sprint ahead.

Fear Three: “My expertise suddenly doesn’t matter anymore.”

This is the identity fear. You spent years, maybe decades, building expertise. You became the person people turned to, building your value on what you know. Now AI can generate that same knowledge in seconds. So what are you?

These aren’t three separate fears—they’re three faces of the same core fear: loss of relevance, loss of security, loss of identity. And here’s what the tech industry doesn’t understand: you can’t train that fear away. You can’t PowerPoint your way past it. You can’t give someone a one-hour lunch-and-learn and expect them to embrace the thing that might make them obsolete. You have to build trust first.

The Trust Stack Parallel

In software development, a “tech stack” is simple—it’s the collection of technologies stacked on top of each other to build an application. Think of it like pancakes, where each layer adds functionality: an operating system at the bottom, a database on top of that, a programming language on top of that, and a user interface on top. Each layer builds on what’s beneath it. Engineers talk about stacks constantly: “What’s your stack?” “Are you a full-stack developer?” It’s the language of how things get built.

So when I say “Trust Stack,” I’m deliberately using the same metaphor. Before you can stack tools on top of tools, you need to stack trust on top of trust. Trust doesn’t happen all at once—it builds through small interactions, each adding to or taking away from it.

My friend Duey Freeman and I talk about this constantly. We have for years on the Elder Council show, and we still do. Trust is built one interaction, one conversation, one kept promise at a time. You can’t rush it, you can’t fake it, you can’t skip to the end. And that’s the fundamental tension: the tech industry says move at light speed, but humans need to move at trust speed.

Go Slow to Go Fast

I learned this on the mat in the dojo. Grandmaster Wheaton would teach us a new technique, and we’d try it immediately at full speed. He’d stop us every time. “Go slow to go fast,” he’d say. It wasn’t about being timid or lacking ambition—it was about laying the foundation so that speed would be possible later. You learn the movement slowly, understand the mechanics, and build the muscle memory. Then and only then can you execute at speed. The alternative is moving fast, doing it wrong, building bad habits, and having to unlearn everything later. That takes longer.

Stephen M.R. Covey wrote an entire book about this called “The Speed of Trust,” and his thesis is that nothing moves as fast as trust.[7] When trust is low, everything slows down because you need approvals, sign-offs, check-ins, and verification. All of that creates what Covey calls a “trust tax,” the cost you pay for lack of trust. When trust is high, everything accelerates: people move without asking permission, make decisions, and take initiative. That’s the “trust dividend,” the benefit you gain from high trust.

The Irvine Company proved this when they transitioned 5,000 employees from Microsoft to Google Apps.[8] They didn’t rush it, didn’t mandate it, and didn’t do a big-bang rollout. They went slow to go fast by holding open conversations, running pilots, deploying “Google Guides”—super users who could help people one-on-one—and creating “Next Lounges” where people could touch and feel the technology. They treated it as a people project, not a technology project. It worked. Full adoption, minimal resistance. Why? Because they built trust before they deployed technology.

The Workshop Script

So what does trust-building actually look like in practice? At Coastal Intelligence, our workshops start with the Trust Stack. We bring together the leadership team plus key team members and facilitate the conversations before we talk about any AI tools. We start with the fear.

When someone says, “I’m afraid AI will replace me,” here’s what we say: “That fear is legitimate. Some roles will change—that’s real. But you’re in this room right now, which means you’re already ahead of most people in your field. The question isn’t ‘will AI replace me?’ The question is ‘how do I make sure I’m the one using AI, not the one being replaced by someone who learned it first?’”

Watch what happens. Shoulders drop, eyes engage, the defensive posture softens. Why? Because we didn’t dismiss the fear. We validated it, then reframed it from threat to agency. You’re not a victim of AI. You’re an early adopter. That’s trust-building. That’s the first layer of the stack.

From there, we create psychological safety, explicitly address job security, and get clear on where AI helps and where humans stay in control. We agree on one or two actual workflows where AI can make someone’s life easier this month—not in theory, in reality. By the end of day one, people stop resisting and start asking, “When do we get this?” That’s the Trust Stack working.

The Three-Stage Path

Here’s how Coastal Intelligence’s CoastalOS methodology works:

Stage One: Trust Stack

Make AI feel safe by addressing fear head-on, fostering psychological safety, and leaving with buy-in rather than resistance.

Stage Two: Tool Stack

Make AI useful on Monday by mapping one or two high-value workflows, curating a small tool stack, and training using actual work instead of theory to get quick wins.

Stage Three: Support Stack

Make it stick through ongoing coaching for AI champions, office hours for the team, check-ins as workflows evolve, and access to a community of other leaders implementing AI.

Notice the sequence: Trust first, tools second, support third. Not tools first and hope for the best. The difference is a 70 to 95 percent failure rate versus actual adoption that sticks.

The Contrarian Challenge

Everyone’s doing this backwards. The consultants show up and say, “Here’s the AI tool. Here’s the training. Here’s the implementation plan. Any questions?” Then everyone is shocked when, six months later, nobody’s using it. Meanwhile, the organization has spent hundreds of thousands on licensing, invested in training, and hired implementation partners. All of it wasted. Why? Because they skipped the trust foundation.

It’s like trying to build a house on sand. You can have the best materials, the best architects, the best construction crew, but if the foundation isn’t solid, the house falls down.

So here’s my challenge: Before you buy another AI tool, before you mandate another training session, before you hire another consultant to show your team how to write better prompts, ask three questions: Do my people trust this technology? Do my people trust me to lead them through this change? Do my people trust themselves to learn and adapt? If the answer to any of those questions is no, you don’t have a technology problem. You have a trust problem.

What This Means for You

You may be a business leader reading this, thinking about AI adoption. Start with trust. Really. Stop looking at tools for a minute, stop reading about what Claude can do, or what ChatGPT just launched. Talk to your team. Really talk. Ask them what scares them, listen without defending, and validate without dismissing. Build the Trust Stack first.

Maybe you’re someone who’s scared of AI. Good. That fear is information telling you something matters here—you care about your work, you care about your relevance. That’s not weakness, that’s human. But don’t let that fear freeze you. Use it, channel it, be the person in the room who learns this early instead of waiting until you have no choice.

You may be a consultant or trainer in this space. Stop selling tools and start building trust. If you can help organizations create psychological safety around AI, you’re offering something genuinely valuable. If you’re just teaching prompt engineering, you’re missing the point.

The technology will keep advancing, the tools will keep getting better, and the capabilities will keep expanding. But humans? We’ll keep being human. We’ll keep needing connection, needing trust, needing time to process and adapt. The winners in this AI transformation won’t be the ones who moved fastest—they’ll be the ones who built the strongest foundation. They’ll be the ones who understand that before you install the Tech Stack, you have to build the Trust Stack.

The Next Conversation

I pointed around the room at VenTech after Guy challenged me. “Events like this,” I said, “this is why these matter so much. We need more occasions where we get together and be human, because if we take COVID isolation and compound it with AI promising we never have to leave our homes again, we lose the human connection that makes trust possible.”

Guy nodded slowly. I could see him thinking it through. “The Trust Stack,” he said. “That’s actually a good framework.”

“Want to grab coffee and talk more about it?” I asked.

He did.

Because that’s how trust gets built. One conversation at a time, one coffee at a time, one interaction at a time. Layer by layer. Stack by stack.

If you’re reading this somewhere between San Luis Obispo and Thousand Oaks, this conversation might resonate with what you’re seeing in your own organization. My co-founders Jim Sterne, Mike Wald, and I at Coastal Intelligence would genuinely enjoy sitting down with you and your team—not to pitch anything, but to explore whether what we’re seeing matches what you’re experiencing. Sometimes the most valuable thing is just having the conversation. Coffee’s on us.

Mark Sylvester is co-founder of Coastal Intelligence, where he helps organizations implement AI through a trust-first methodology. When he’s not vibe-coding, he’s performing improv comedy with An Embarrassment of Pandas, producing TEDxSantaBarbara events, and helping his wife, Kymberlee Weil, change the world one talk at a time at StorytellingSchool.com.

This story is part of “Through Another Lens,” an exploration of ideas that challenge conventional thinking. Subscribe at [link] or listen to the podcast version with original musical score at [link].

References

[1] Forrester Research (2024). “The State of AI Adoption in Enterprises.” Multiple industry studies, including those by McKinsey & Company and Gartner, consistently report AI implementation failure rates of 70-95%.

[2] MIT Sloan Management Review and Boston Consulting Group (2023). “Expanding AI’s Impact with Organizational Learning.”

[3] PwC Global Artificial Intelligence Study (2024). “AI Jobs Barometer: Employee Confidence in AI Leadership.”

[4] Deloitte Insights (2024). “State of AI in the Enterprise, 5th Edition: Focus on People and Process Challenges.”

[5] Covey, Stephen M.R. and Rebecca R. Merrill. “The Speed of Trust: The One Thing That Changes Everything.” Free Press, 2006. Study data from Watson Wyatt research on trust and shareholder returns.

[6] Galloway, Scott. “The Prof G Pod” (2023). Multiple episodes discussing AI adoption and workforce transformation.

[7] Covey, Stephen M.R. “The Speed of Trust: The One Thing That Changes Everything.” Free Press, 2006.

[8] Google Cloud Case Study (2012). “The Irvine Company: Cloud Transformation and Change Management.” Documented in multiple technology adoption research papers on change management best practices.